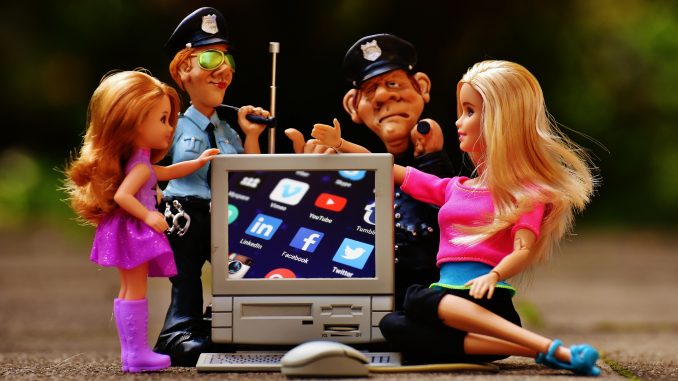

It is a question almost as old as the internet itself: how do you prevent children from being exposed to the more dangerous elements of the internet?

Recent statistics suggest that up to 68 per cent of children have seen sexual images online, while 27 per cent have received sexual images from people they have encountered on the internet. Almost a fifth of all 12-to-15-year-olds have seen something they described as ‘worrying or nasty’ on social media. For years now, major technology companies have been criticised for not doing enough to protect youngsters. In recent years, that blame has shifted towards social media sites, where online bullying and cyber abuse have become rife.

Action is finally being taken by some of the major players, but still not at the speed many governments across the world would like to see.

Social media companies are being called upon to subscribe to a voluntary code of practice to tackle cyber-bullying, trolling and abuse, plus under-age access to pornography. They are also being asked to be more transparent on what percentage of reported content is taken down, how complaints are handled, the different genres of complaints – such as under-age content, religion or sexuality – and how the sites moderate content. They could also be called upon to help fund campaigns against abuse in plans rolled out earlier this month.

It is felt that a collaborative rather than coercive approach could prove more fruitful given the companies’ willingness to help.

“At Facebook, we take these issues very seriously,” said Antigone Davis, head of global safety policy at Facebook, at the Child Dignity in the Digital World summit in Rome on October 11. But what practical measures are actually in place?

Davis pointed to the speed with which Facebook alert authorities to potentially illegal posts, not to mention their zero-tolerance policy on posts and pages which could promote the sexual exploitation of children.

Facebook have also collaborated with Google and Microsoft to develop a number of programmes to make it easier to find sites which undermine child dignity. Twitter have also reacted by streamlining their procedures regarding harassment and abuse.

Crucially, they have also started using both automated and human responses to assess and act upon reported abuse. Last year, they also introduced their Safety Centre to give advice on how to stay safe online, with a particular section dedicated to teenagers.

While not a social media site, Google does at least appear to be playing a proactive role in the debate. Calling the search engine giant ‘part of the ecosystem of the internet’, Google’s public policy and government relations manager Katie O’Brien highlighted the safer search function on YouTube, plus YouTube Kids, which offers age-appropriate content for primary-school-aged children.

Given the vast profits tech giants can generate, serious questions remain over their contribution to the issue. In their defence, social media in particular remains a new phenomenon, and protecting those who use it the most remains both challenging and uncharted territory.
